LLM Evaluation

How do you know which LLM to use for your project? A vibe check?

When a new large language model (LLM) is released, everyone is eager to try it out, generate text, and see how it performs on various tasks. But how do you choose the right model for your specific project?

Here’s my approach to vetting an LLM before using it in a project:

These resources help you filter out models that don’t perform well on the tasks you’re interested in. Once you have a shortlist of models, you can evaluate them on a custom dataset using specialized metrics. Let’s learn how!

Tutorial Goals

In this tutorial, you will:

- Understand why classical ML metrics don’t work for LLMs

- Use a variety of metrics to evaluate models for your project

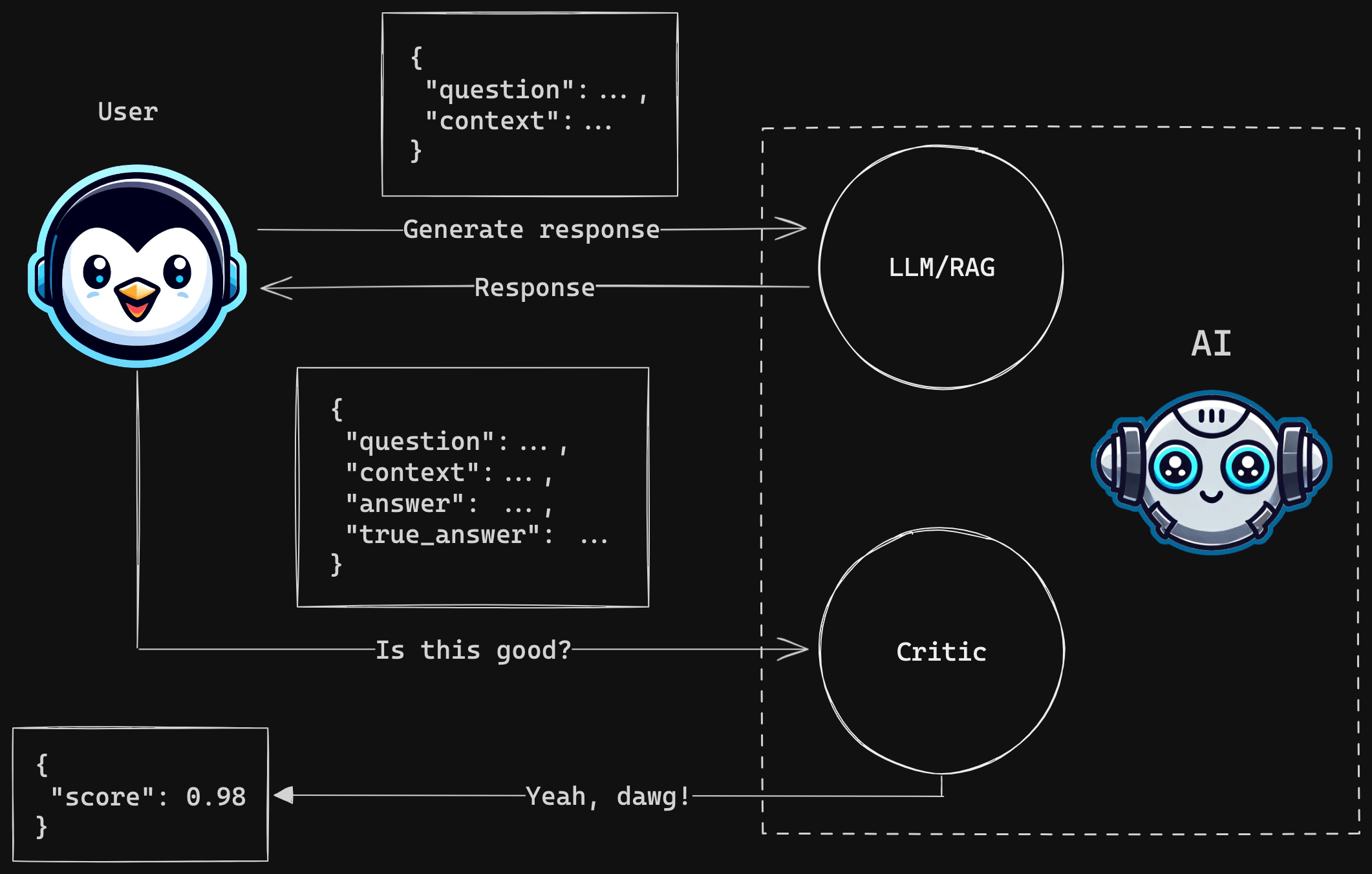

- Create a pipeline for evaluating multiple models
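To make the first and last goals more concrete, here is a minimal sketch of a pipeline that scores several models on a small custom dataset. The models (`model_a`, `model_b`) are hypothetical stubs that return canned answers in place of real API calls or local inference. The two metrics, exact match and token-level F1, are classical string-matching scores chosen only for illustration. They stand in for whatever metrics you plug in and are not necessarily the ones used later in this tutorial.

```python
from collections import Counter
from typing import Callable

# Hypothetical stand-ins for real models: each maps a prompt to a response.
def model_a(prompt: str) -> str:
    answers = {"What is the capital of France?": "Paris",
               "Who wrote Hamlet?": "William Shakespeare"}
    return answers.get(prompt, "")

def model_b(prompt: str) -> str:
    answers = {"What is the capital of France?": "The capital of France is Paris.",
               "Who wrote Hamlet?": "Shakespeare wrote Hamlet."}
    return answers.get(prompt, "")

def normalize(text: str) -> list[str]:
    # Lowercase, drop punctuation, and split on whitespace.
    cleaned = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return cleaned.split()

def exact_match(prediction: str, reference: str) -> float:
    # 1.0 only if the normalized token sequences are identical.
    return float(normalize(prediction) == normalize(reference))

def token_f1(prediction: str, reference: str) -> float:
    # Harmonic mean of token precision and recall (bag-of-words overlap).
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)

def evaluate(models: dict[str, Callable[[str], str]],
             dataset: list[dict[str, str]],
             metrics: dict[str, Callable[[str, str], float]]) -> dict[str, dict[str, float]]:
    # Average each metric over the dataset for each model.
    results = {}
    for model_name, model in models.items():
        predictions = [model(example["prompt"]) for example in dataset]
        scores = {}
        for metric_name, metric in metrics.items():
            per_example = [metric(pred, example["reference"])
                           for pred, example in zip(predictions, dataset)]
            scores[metric_name] = sum(per_example) / len(per_example)
        results[model_name] = scores
    return results

# A tiny custom dataset of prompts with reference answers.
dataset = [
    {"prompt": "What is the capital of France?", "reference": "Paris"},
    {"prompt": "Who wrote Hamlet?", "reference": "William Shakespeare"},
]

results = evaluate({"model_a": model_a, "model_b": model_b},
                   dataset,
                   {"exact_match": exact_match, "token_f1": token_f1})

for name, scores in results.items():
    print(name, {metric: round(value, 3) for metric, value in scores.items()})
```

Both stub models answer correctly, yet `model_b`'s full-sentence answers score 0.0 on exact match and only about 0.34 on token F1. This gap between correctness and string overlap is one reason classical ML metrics struggle with free-form LLM output. Because `evaluate` takes the metrics as a dictionary of functions, you can swap in other metrics without changing the loop.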