Fine-tuning Llama 3 LLM for RAG

How can you make an LLM better at your specific use case?

Are you happy with how your large language model (LLM) performs on a specific task? If not, fine-tuning might be the answer. A smaller model that is fine-tuned well for a narrow task can even outperform a larger general-purpose model on that task.

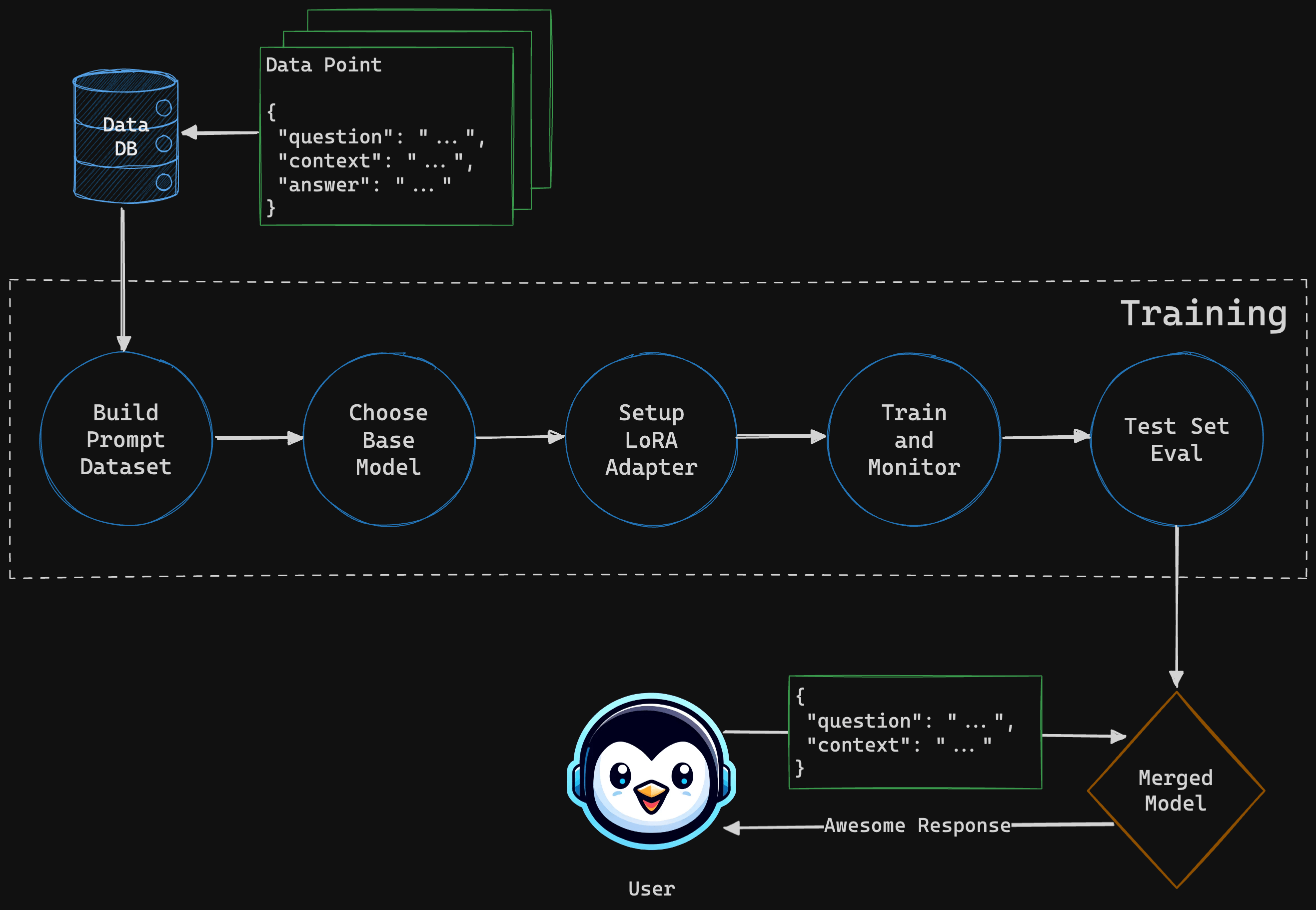

In this tutorial, you’ll learn how to fine-tune a Llama 3 model for financial question answering in a retrieval-augmented generation (RAG) system. We’ll cover:

- Building a complete dataset

- Picking a base model

- Setting up a LoRA adapter

- Training the model

- Evaluating its performance
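A LoRA adapter leaves the pretrained weights frozen and trains a small low-rank update instead. The pure-Python sketch below illustrates the idea under stated assumptions: toy 2x2 matrices, and rank 16 on a 4096 x 4096 projection for the size estimate (4096 is the hidden size of Llama 3 8B; the exact model size and rank used later in this tutorial are assumptions here). `lora_effective_weight` and `lora_param_count` are hypothetical helpers, not the API of a library such as PEFT.

```python
# Minimal LoRA sketch (pure Python, no ML libraries).
# LoRA keeps the pretrained weight W (d_out x d_in) frozen and learns two
# small matrices: B (d_out x r) and A (r x d_in). The effective weight is
# W + (alpha / r) * B @ A.

def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]

def lora_effective_weight(W, A, B, alpha, r):
    """Return W + (alpha / r) * B @ A without modifying W (hypothetical helper)."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]

def lora_param_count(d_out, d_in, r):
    """Trainable parameters added by one adapter: r * (d_in + d_out) (hypothetical helper)."""
    return r * (d_in + d_out)

# Toy example: B starts at zero, so before training the adapter changes nothing.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -0.5]]          # r = 1, d_in = 2
B_init = [[0.0], [0.0]]    # d_out = 2, r = 1
print(lora_effective_weight(W, A, B_init, alpha=2, r=1))  # [[1.0, 2.0], [3.0, 4.0]]

# Size estimate for one 4096 x 4096 projection with an assumed rank of 16.
full = 4096 * 4096
lora = lora_param_count(4096, 4096, r=16)
print(full, lora, f"{100 * lora / full:.3f}%")  # 16777216 131072 0.781%
```

Only A and B receive gradients, which is why an adapter is far cheaper to train and store than a full copy of the model's weights.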

Let’s get started!

Tutorial Goals

In this tutorial you will:

- Create a dataset for fine-tuning

- Establish a baseline with the base model before fine-tuning

- Set up and monitor the training run

- Evaluate the performance of the trained model
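The baseline and final evaluation only mean something if both models are scored the same way on the same questions. This section does not say which metric the tutorial uses, so the sketch below assumes a SQuAD-style token-overlap F1, a common choice for question answering. `normalize`, `token_f1` and `mean_f1` are hypothetical helpers, not part of any library.

```python
import re
import string
from collections import Counter

def normalize(text):
    """Lowercase, drop punctuation and the articles a/an/the, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def token_f1(prediction, reference):
    """Token-overlap F1 between a predicted answer and a reference answer."""
    pred_tokens = normalize(prediction).split()
    ref_tokens = normalize(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; only one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    common = Counter(pred_tokens) & Counter(ref_tokens)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)

def mean_f1(predictions, references):
    """Average token F1 over a dataset of (prediction, reference) pairs."""
    scores = [token_f1(p, r) for p, r in zip(predictions, references)]
    return sum(scores) / len(scores)

# Usage: score the same questions with the base model and the fine-tuned model.
print(round(token_f1("Revenue grew by 12% in 2023", "Revenue grew 12% in 2023."), 3))  # 0.909
```

Running `mean_f1` once on the base model's answers and once on the fine-tuned model's answers gives two directly comparable numbers for the baseline and the final evaluation.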