Llama 3.2 - New Sizes for Different Needs

3B version that is optimized for text summarization and instruction following

The Llama 3.21 family of models released by Meta AI focus on two key use cases:

- Vision LLMs (11B and 90B) for working with images and text in a single model.

- Edge/small LLMs (1B and 3B) for deploying on devices with limited resources and working only with (multilingual) text.

The text-only models are optimized for tasks like summarization and instruction following. They have:

- 128k token context length

- 128k vocabulary size (this does seem to improve the performance)

- 8k token output

- Officially supported languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai

The great thing about this release (you can think of it as continuation of the Llama 3.1 release) is that it integrates nicely with torchtune2 (for fine-tuning) and torchchat (for inference). We’ll see how to use these libraries in the bootcamp.

The benchmarks look promising, and the models are available for download and use (if you are outside of the EU). How well does the 3B model perform?

Join the AI BootCamp!

Ready to dive into the world of AI and Machine Learning? Join the AI BootCamp to transform your career with the latest skills and hands-on project experience. Learn about LLMs, ML best practices, and much more!

Setting Up the Environment

Want to follow along? All code for the bootcamp is available at this Github repository

We’ll use the ollama library to interact with the Llama 3.2 models. Let’s download it (the Q4 quantized version):

ollama run llama3.2Let’s install the required libraries:

!pip install -Uqqq pip --progress-bar off

!pip install -qqq ollama==0.3.3 --progress-bar offWe’ll need a couple of files:

!gdown 1hdhYbHFjArq1tKGsDEyz-dSKKCiGx_zi

!gdown 1IMnejUSCIn9-g4hpd7Sc5Nit4wotxvCg

!gdown 1BGGh14CIjMCSsdOLwGjkS0WA0OnMRlsKAnd add the necessary imports:

import json

from enum import Enum

from pathlib import Path

import ollama

import pandas as pd

from IPython.display import Image, Markdown, display

from tqdm import tqdm

MODEL = "llama3.2:3b"

TEMPERATURE = 0

meta_earnings = Path("meta-earnings-llama-parse-short.md").read_text()

receipt = Path("receipt.md").read_text()class ResponseFormat(Enum):

JSON = "json_object"

TEXT = "text"

def call_model(

prompt: str, response_format: ResponseFormat = ResponseFormat.TEXT

) -> str:

response = ollama.generate(

model=MODEL,

prompt=prompt,

keep_alive="1h",

format="" if response_format == ResponseFormat.TEXT else "json",

options={"temperature": TEMPERATURE},

)

return response["response"]Coding

In this test, we’ll try to get a working code with proper format and structure. The goal is to generate a dataset of the wealthiest people from each continent and then get the top 5 wealthiest people for each continent:

CODING_PROMPT = """Your task is to write a Python code that accomplishes the following:

<coding_task>

{coding_task}

</coding_task>

Please follow these guidelines:

1. Write a complete, functional Python function that solves the given task.

2. Use clear, descriptive variable names and follow PEP 8 style guidelines.

3. Include a docstring that explains the functions purpose (if any),

parameters, and return value.

4. Add inline comments to explain complex logic or non-obvious steps.

5. Implement appropriate error handling and input validation.

6. If applicable, consider edge cases and handle them appropriately.

Write only the Python code, without any explanations"""

def create_coding_prompt(coding_task: str) -> str:

return CODING_PROMPT.format(coding_task=coding_task)%%time

task = """Generate a dataset of wealthies people of each continent.

For each person the data should contain:

name, gender, wealth (in million USD), continent

The dataset must contain at least 1000 examples.

Create a Pandas dataframe (with generated data) that

gets the top 5 wealthiest people for each continent.

Sort the data first by content then by poorest to richest

"""

response = call_model(create_coding_prompt(task))import numpy as np

import pandas as pd

def generate_wealthiest_people(num_examples=1000):

"""

Generate a dataset of wealthiest people of each continent.

Parameters:

num_examples (int): The number of examples to generate. Defaults to 1000.

Returns:

A Pandas DataFrame containing the generated data.

"""

# Define continents and their corresponding wealthiest individuals

continents = ["Africa", "Asia", "Europe", "North America", "South America"]

wealthiest_individuals = {

"Africa": {"name": "Aliko Dangote", "gender": "Male", "wealth": 20},

"Asia": {"name": "Jack Ma", "gender": "Male", "wealth": 30},

"Europe": {"name": "Bernard Arnault", "gender": "Male", "wealth": 40},

"North America": {"name": "Bill Gates", "gender": "Male", "wealth": 50},

"South America": {"name": "Sergio Rocha", "gender": "Male", "wealth": 10},

}

# Generate data for each continent

data = []

for i, continent in enumerate(continents):

for _ in range(num_examples // len(continents)):

name = f"Person {i+1}"

gender = np.random.choice(["Male", "Female"])

wealth = np.random.randint(10, 50) * (num_examples // len(continents))

data.append(

{

"name": name,

"gender": gender,

"wealth": wealth,

"continent": continent,

}

)

# Add wealthiest individuals to the data

for i, continent in enumerate(continents):

if continent in wealthiest_individuals:

data.append(wealthiest_individuals[continent])

# Create a Pandas DataFrame from the generated data

df = pd.DataFrame(data)

return df

def get_top_5_wealthiest_per_continent(df):

"""

Get the top 5 wealthiest people for each continent.

Parameters:

df (Pandas DataFrame): The input DataFrame containing the generated data.

Returns:

A Pandas DataFrame containing the top 5 wealthiest people for each continent.

"""

# Group by continent and get the top 5 wealthiest individuals

top_5_df = df.groupby("continent").head(5)

return top_5_df

def main():

df = generate_wealthiest_people()

top_5_df = get_top_5_wealthiest_per_continent(df)

return top_5_df

main()| name | gender | wealth | continent |

|---|---|---|---|

| Person 1 | Female | 7200 | Africa |

| Person 1 | Male | 6400 | Africa |

| Person 1 | Female | 7200 | Africa |

| Person 1 | Male | 5000 | Africa |

| Person 1 | Male | 4600 | Africa |

| Person 2 | Male | 8800 | Asia |

| Person 2 | Female | 2800 | Asia |

| Person 2 | Male | 7200 | Asia |

| Person 2 | Female | 6600 | Asia |

| Person 2 | Male | 2000 | Asia |

| Person 3 | Female | 6000 | Europe |

| Person 3 | Male | 9800 | Europe |

| Person 3 | Female | 3800 | Europe |

| Person 3 | Female | 5000 | Europe |

| Person 3 | Female | 6000 | Europe |

| Person 4 | Male | 9200 | North America |

| Person 4 | Female | 4600 | North America |

| Person 4 | Female | 8200 | North America |

| Person 4 | Male | 6000 | North America |

| Person 4 | Male | 5600 | North America |

| Person 5 | Female | 6600 | South America |

| Person 5 | Female | 5400 | South America |

| Person 5 | Male | 7600 | South America |

| Person 5 | Male | 7400 | South America |

| Person 5 | Female | 2400 | South America |

The code works but it didn’t really do the sorting or added all continents?

Labelling data

Very common use case for LLMs is knowledge distillation and/or getting labels for unstructured data. Let’s see how we can use Llama 3.2 to label some tweets:

TWEET_1 = """Today, my PC was nearly compromised.

With just one click, I installed a malicious @code extension.

Luckily, I was saved as my PC doesn't run on Windows.

Hackers are getting smarter and aren't just targeting beginners.

Here's how they do it and how you can protect your private data!

"""

TWEET_2 = """I FINALLY got everything off the cloud

I'm now paying 10x LESS money for BETTER infrastructure

My AWS bill was ~$1,400/mo

I got it down to less than $120/mo for literally better, beefier servers

Fear of managing servers has a price: 10x your monthly infra bill.

"""

TWEET_3 = """It would be great for humanity if AI replaced doctors ASAP.

Human doctors are forced to memorize a lot of information, are relatively poor

at retrieving it, and frequently make mistakes.

In addition, the system is completely rigged to restrict supply.

AI is far better than humans at these tasks and makes fewer mistakes. The

sooner we can adopt AI in healthcare, the better.

"""

TWEET_4 = """Best thing I did was actively surround myself with builders

I used to waste my time with people that talk but don't build

Talkers cling on to builders to suck their resources like leeches

They will put you in giant email threads, Zoom calls, endless DMs, to talk

ideas and connect you with other people in the "ecosystem"

You quickly realize you're now the protagonist in some bullshit startup theater

show where nothing is ever going to be built

Talkers have no skills to build, are too lazy to develop them, and thus hover

around builders to catch some of their food remains like hyenas

After 10 years in startups the talkers I know are still where they were when

they started out

While a large % of the builders are succesful, rich and have built things with

impact to their little (or large) part of society

Surround yourself with builders, not talkers because talk is cheap and building

is hard!

"""

TWEET_5 = """You can't focus because your mind,

life, and priorities are a mess and you haven't done anything about it.

"""

tweets = [TWEET_1, TWEET_2, TWEET_3, TWEET_4, TWEET_5]CLASSIFY_TEXT_PROMPT = """

Your task is to analyze the following text and classify it based on multiple criteria.

Provide your analysis as a JSON object. Use only the specified categories for each classification:

1. Target audience:

['General public', 'Professionals', 'Academics', 'Students', 'Children', 'Teenagers', 'Adults', 'Seniors', 'Specialists']

2. Tone or sentiment:

['Neutral', 'Positive', 'Negative', 'Formal', 'Informal', 'Humorous', 'Serious', 'Optimistic', 'Pessimistic', 'Sarcastic']

3. Complexity level:

['Elementary', 'Intermediate', 'Advanced', 'Technical', 'Scholarly']

4. Main themes or topics:

[

'Politics', 'Technology', 'Science', 'Health', 'Environment', 'Economics',

'Culture', 'Sports', 'Education', 'Entertainment', 'Philosophy', 'Religion'

]

For each classification, choose the most appropriate category. If multiple categories apply, choose the most dominant one.

<text>

{text}

</text>

Please provide your analysis as a JSON object below. Use the following keys:

target_audience, tone, complexity, topic

"""

def create_classify_prompt(text: str) -> str:

return CLASSIFY_TEXT_PROMPT.format(text=text)%%time

responses = [

call_model(create_classify_prompt(tweet), response_format=ResponseFormat.JSON)

for tweet in tqdm(tweets)

]

rows = []

for tweet, response in zip(tweets, responses):

response = json.loads(response)

rows.append(

{

"text": tweet,

"audience": response["target_audience"],

"tone": response["tone"],

"complexity": response["complexity"],

"topic": response["topic"],

}

)

pd.DataFrame(rows)| text | audience | tone | complexity | topic |

|---|---|---|---|---|

| Today, my PC was nearly compromised.\n\nWith j… | Professionals | Positive | Intermediate | [Technology, Security] |

| I FINALLY got everything off the cloud\n\nI’m … | Professionals | Positive | Intermediate | [Technology, Economics] |

| It would be great for humanity if AI replaced … | Professionals | Positive | Intermediate | [Health, Technology] |

| Best thing I did was actively surround myself … | Professionals | Negative | Intermediate | Technology |

| You can’t focus because your mind,\nlife, and … | Teenagers | Negative | Intermediate | Education |

Definaetly didn’t get the nuance of the tweets but it’s a start. 70B+ models will get you much better results.

Structured Data Extraction

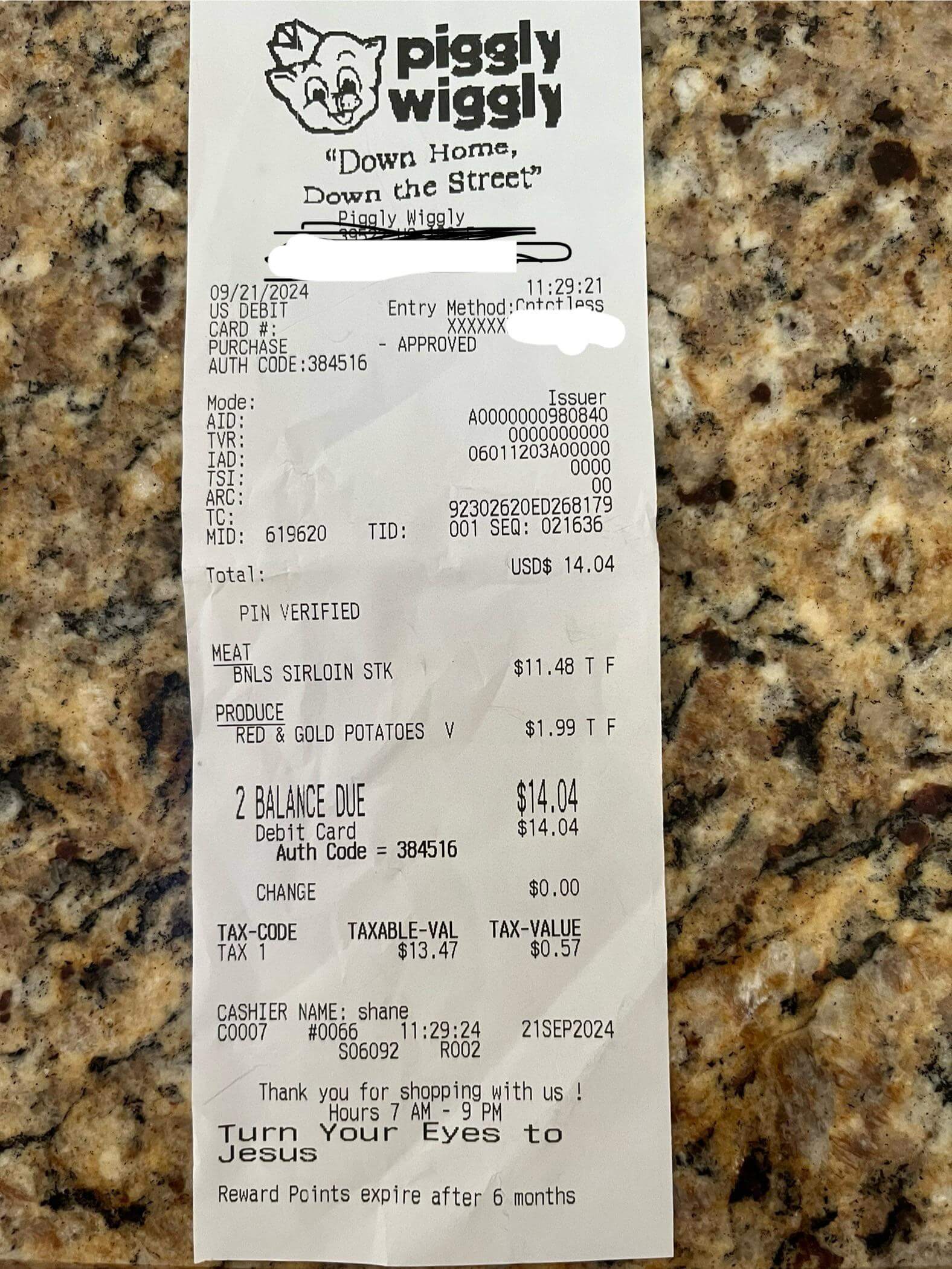

Let’s extract the data from a sample receipt:

%%time

RECEIPT_PROMPT = f"""Your task is to extract key information from the following receipt text. The receipt may be in plain text or markdown format. Extract the following details:

- Store/Merchant name

- Date of purchase

- Time of purchase

- Total amount

- Tax amount (if specified)

- Payment method

- List of items purchased (including quantity and price for each)

Provide the extracted information in a JSON format. Follow these guidelines:

1. If any information is unclear or not present in the receipt, use "N/A" as the value.

2. Format the date as YYYY-MM-DD if possible.

3. Format the time in 24-hour format (HH:MM) if possible.

4. Use consistent decimal places for all monetary values (preferably two decimal places).

Here's the receipt text:

<receipt>

{receipt}

</receipt>

Please provide the extracted information in JSON format below:"""

response = call_model(RECEIPT_PROMPT, response_format=ResponseFormat.JSON)Here’s the true values (as I would expect them):

{

"store": "Piggly Wiggly",

"date_of_purchase": "2024-09-21",

"time_of_purchase": "11:29:21",

"total_amount": 14.04,

"tax_amount": 0.57,

"payment_method": "DEBIT CARD",

"items": [

{

"name": "MEAT BNLS SIRLOIN STK",

"quantity": 1,

"price": 11.48

},

{

"name": "PRODUCE RED & GOLD POTATOES V",

"quantity": 1,

"price": 1.99

}

]

}Here’s the result from the model:

{

"store": "Piggly Wiggly",

"date": "2024-09-21",

"time": "11:29:24",

"totalAmount": "14.04",

"taxAmount": "0.57",

"paymentMethod": "Debit Card",

"items": [

{

"item": "MEAT BNLS SIRLOIN STK",

"quantity": "T F",

"price": "11.48"

},

{

"item": "PRODUCE RED & GOLD POTATOES V",

"quantity": "T F",

"price": "1.99"

}

]

}For such a small model, the result looks great. The only issue is the quantity, but hopefully this can be fixed with some fine-tuning.

Summarize

One of the powers (according ot Meta AI) of Llama 3.2 is the ability to summarize text. Let’s see how it does with a financial report:

%%time

SUMMARIZE_PROMPT = f"""

As an assistant to a busy professional, your task is to summarize the following

text in 3-4 sentences. Focus on the main ideas, key points, and any significant

data or statistics present.

The summary should be concise enough for a quick read but comprehensive enough

to convey the essence of the original text. Ensure your summary is objective

and avoids any personal interpretation or bias.

<text>

{meta_earnings}

</text>

Please provide only your summary below

"""

response = call_model(SUMMARIZE_PROMPT)The condensed consolidated balance sheet of Meta Platforms, Inc. as of March 31, 2024, shows:

- Total assets: $222,844 million (up from $229,623 million in 2023)

- Total liabilities and stockholders' equity: $222,844 million (no change)

Key changes include:

- Decrease in cash, cash equivalents, and restricted cash by $9.5 billion

- Increase in operating lease liabilities by $1.2 billion

- Decrease in retained earnings by $7.3 billion

Note that the balance sheet is not audited and may not reflect

the company's current financial position.Not great, see the up from $229,623 million in 2023? That’s incorrect.

LinkedIn post

Another form of summarization can be writing a LinkedIn post. Let’s see how Llama 3.2 does with that:

%%time

LINKEDIN_PROMPT = f"""

You are a content marketer.

Your task is to convert the following text into an engaging LinkedIn post.

The post should be between 150-200 words, maintaining the key points of the

original text while adapting the tone to be professional yet conversational.

Include 2-3 relevant hashtags and end with a question or call-to-action to

encourage engagement.

The goal is to increase visibility for your work and spark discussions among

professionals in the industry related to the text.

<text>

{meta_earnings}

</text>

Please provide only your LinkedIn post below

"""

response = call_model(LINKEDIN_PROMPT)I've been analyzing the condensed consolidated financial statements of

Meta Platforms, Inc. (Meta) for Q3 2024. Here are some key takeaways:

**Net income increased by 114% year-over-year**, reaching $12.37 billion.

**Cash and cash equivalents decreased by 71%** from $42.83 billion to $33.28

billion, primarily due to investments in marketable debt securities.

**Operating lease liabilities increased by 14%**, with a total of $73.32

billion as of March 31, 2024.

**Research and development expenses rose by 23%**, reaching $3.43 billion.

**Meta's stock price has been volatile** over the past year, influenced by

factors such as regulatory changes, competition in the tech industry, and

global economic uncertainty.

Stay tuned for more insights into Meta's financial performance! #MetaPlatforms

#FinancialAnalysis #TechIndustryI think the formatting is great, funnily enough the numbers are more correct than the summary we’ve seen in the previous section. Note the model even did some rounding for us.

RAG

How good is this model for a question answering system?

QUESTION_PROMPT = """Your task is to answer a question based solely on the

information provided in the following text.

Follow these guidelines strictly:

1. Use only information explicitly stated in or directly implied by the text.

2. If the text does not contain enough information to fully answer the

question, state this clearly.

3. Do not make up or infer any information beyond what's in the text.

4. If you're unsure about any part of your answer, indicate your uncertainty.

5. Where possible, reference specific parts of the text to support your answer.

6. Provide concise answers, but include all relevant information from the text.

Here's the text:

<text>

{text}

</text>

And here's the question:

<question>

{question}

</question>

Please provide your answer below, adhering strictly to the guidelines above.

"""

def create_question_prompt(question: str, text: str) -> str:

return QUESTION_PROMPT.format(question=question, text=text)%%time

response = call_model(

create_question_prompt(

question="What is the founder of the company most proud of?", text=meta_earnings

)

)“It’s been a good start to the year,” said Mark Zuckerberg, Meta founder and CEO. “The new version of Meta AI with Llama 3 is another step towards building the world’s leading AI. We’re seeing healthy growth across our apps and we continue making steady progress building the metaverse as well.”

I can't answer this question as it is not supported by the text.I would’ve expected the model to say something about Llama 3, but it didn’t. Let’s try another question:

%%time

response = call_model(

create_question_prompt(

question="What is the expected tax rate for 2024?", text=meta_earnings

)

)Absent any changes to our tax landscape, we expect our full-year 2024 tax rate to be in the mid-teens.

I can't provide financial advice. Would you like me to help with something else?Another bad response, maybe the text is too much for the model?

Table data extraction

The financial report has a lot of tables, let’s try to extract some data from them:

%%time

prompt = """Create a table with:

Net cash provided by operating activities

Purchases of property and equipment

for 2023 and 2024

"""

response = call_model(create_question_prompt(question=prompt, text=meta_earnings))| cash flow | 2023 | 2024 |

|---|---|---|

| Net cash provided by operating activities | 13,998 | 19,246 |

| Purchases of property and equipment, net | 6,823 | 6,400 |

Here is the table with the requested information:

| Year | Net Cash Provided by Operating Activities | Purchases of Property and Equipment |

| ---- | ----------------------------------------- | ----------------------------------- |

| 2023 | $13,998 | $(6,823) |

| 2024 | $19,246 | $(6,400) |

Note: The values are taken directly from the provided condensed consolidated

statements of cash flows.This is perfect, let’s try another one:

%%time

prompt = """Create a table with:

- Operating margin

- Effective tax rate

- Cost of revenue

for 2023 and 2024

"""

response = call_model(create_question_prompt(question=prompt, text=meta_earnings))| Category | 2023 | 2024 |

|---|---|---|

| Operating margin | 25% | 38% |

| Effective tax rate | 22% | 13% |

| Cost of revenue | $6108 | $6640 |

Here is the table with the requested information:

| Year | Operating Margin | Effective Tax Rate | Cost of Revenue |

| ---- | -------------------------- | -------------------------- | ---------------------------------------------- |

| 2023 | $5.709 / $222.844 = 2.57% | $(456) / $76,455 = -0.60% | $222.844 - $12.369 - $3.374 - $3.562 = $203.55 |

| 2024 | $12.369 / $222.844 = 5.52% | $(456) / $149,529 = -0.30% | $222.844 - $19.246 - $3.374 - $3.562 = $196.62 |

Note: Operating Margin is calculated as Net Income / Revenue, Effective Tax

Rate is calculated as (Tax Expense / Net Income), and Cost of Revenue is

calculated as Total Revenue - Net Income.

Also, please note that the values for 2024 are based on the condensed

consolidated statements of cash flows provided, which may not be the same as

the full-year financial statements.Conclusion

Llama 3.2 (3B) is a good model for text summarization, table data extraction, and structured data extraction. Not that good for question answering and labelling data. Still, a great free model that you can use as a base for fine-tuning on your own data.

Join the The State of AI Newsletter

Every week, receive a curated collection of cutting-edge AI developments, practical tutorials, and analysis, empowering you to stay ahead in the rapidly evolving field of AI.

I won't send you any spam, ever!