Experiment Tracking with DVC

Managing and tracking machine learning experiments quickly becomes complex, especially as projects scale. Tools like DVC (Data Version Control) help developers and data scientists automate and streamline this process. Through a practical example, a Random Forest classifier trained on the Iris dataset, we'll explore how DVC supports experiment tracking.

Why Track Experiments?

Imagine you're working on a machine learning project. As your experiments grow in number, so does the difficulty of tracking each one's parameters, metrics, and outcomes. Without proper management, this complexity can lead to significant overhead and confusion, potentially stalling project progress.

DVC offers a solution to this problem by integrating code and data version control, making it simpler to reproduce experiments and track changes across project iterations. The dvclive module, in particular, enables automatic metric logging during model training, which is crucial for comparing experiments and understanding model performance over time.

Basic Tracking with DVCLive

DVCLive is a lightweight Python library that integrates with DVC to log metrics during model training. By adding a few lines of code to your training script, you can automatically capture key metrics such as accuracy, average precision, and recall, and visualize them in the DVC Experiments View.

    from dvclive import Live
    from sklearn import datasets
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score, average_precision_score, recall_score
    from sklearn.model_selection import train_test_split


    def evaluate(model: RandomForestClassifier, X, y, split: str, live: Live):
        # Log accuracy, macro average precision, and macro recall for one split.
        y_pred = model.predict(X)
        live.log_metric(f"{split}/accuracy", accuracy_score(y, y_pred))
        live.log_metric(
            f"{split}/average_precision",
            average_precision_score(y, model.predict_proba(X), average="macro"),
        )
        live.log_metric(f"{split}/recall", recall_score(y, y_pred, average="macro"))


    # Load the Iris features and labels, then hold out 20% of the rows for testing.
    X, y = datasets.load_iris(as_frame=True, return_X_y=True)

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )

    # Everything logged inside this block is saved under the "experiments" directory.
    with Live("experiments") as live:
        model = RandomForestClassifier(n_estimators=5, max_depth=1, random_state=42)
        model.fit(X_train, y_train)
        evaluate(model, X_train, y_train, "train", live)
        evaluate(model, X_test, y_test, "test", live)

We're training a RandomForestClassifier on the Iris dataset, evaluating its performance, and logging the results using the dvclive library. Key components of the code include:

- Loading the Iris dataset: Uses sklearn.datasets.load_iris to fetch the Iris dataset, which is divided into training and testing sets
- Model Training: A RandomForestClassifier is instantiated with specific hyperparameters (n_estimators=5, max_depth=1) and trained on the training data
- Evaluation and Logging: The evaluate function is called for both training and test sets. It logs metrics (accuracy, average precision, and recall) using the dvclive library
- dvclive Integration: The Live context manager from dvclive is used to initialize a logging environment, which automatically tracks and saves the evaluation metrics

You can run this script using the command python app.py and view the results

with:

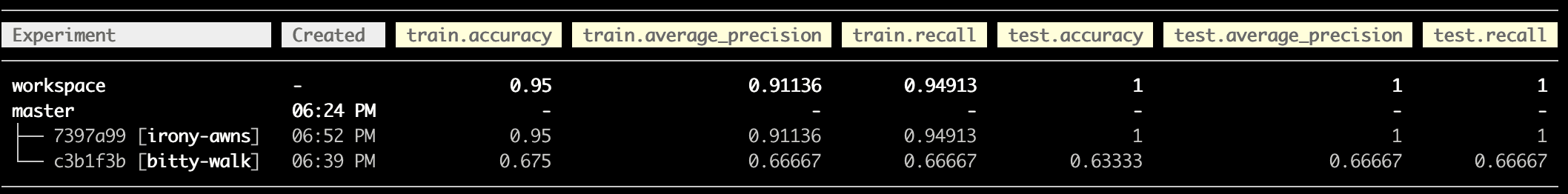

dvc exp show --md| Experiment | train.accuracy | train.average_precision | train.recall | test.accuracy | test.average_precision | test.recall |

|---|---|---|---|---|---|---|

| workspace | 0.675 | 0.66667 | 0.66667 | 0.63333 | 0.66667 | 0.66667 |

| master | - | - | - | - | - | - |

| └── c3b1f3b [bitty-walk] | 0.675 | 0.66667 | 0.66667 | 0.63333 | 0.66667 | 0.66667 |

The table contains our first experiment's results, showing the accuracy, average precision, and recall scores for both the training and test sets.
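To make the recall column easier to read, here is a minimal sketch of what a macro-averaged recall measures: the recall of each class, averaged with equal weight per class. The helper name macro_recall and the six labels are hypothetical and are not taken from the Iris run; they only show one way a value like 0.66667 can arise.

```python
from collections import Counter


def macro_recall(y_true, y_pred):
    """Average the per-class recall, giving every class equal weight."""
    support = Counter(y_true)  # number of true samples per class
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)  # correct predictions per class
    per_class = [hits[label] / support[label] for label in sorted(support)]
    return sum(per_class) / len(per_class)


# Hypothetical labels: classes 0 and 2 are always predicted correctly,
# class 1 is never predicted correctly.
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 2, 2, 2, 2]

print(round(macro_recall(y_true, y_pred), 5))  # 0.66667
```

In this toy case two of the three classes have a recall of 1 and one has a recall of 0, so the macro average is 2/3.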

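Let's run another experiment by changing the hyperparameters of the model, that is, the n_estimators and max_depth values passed on this line:

    model = RandomForestClassifier(n_estimators=5, max_depth=1, random_state=42)

After rerunning the script with new values, let's look at the results: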

    dvc exp show --md

| Experiment | train.accuracy | train.average_precision | train.recall | test.accuracy | test.average_precision | test.recall |
|---|---|---|---|---|---|---|
| workspace | 0.95 | 0.91136 | 0.94913 | 1 | 1 | 1 |
| master | - | - | - | - | - | - |
| ├── 7397a99 [irony-awns] | 0.95 | 0.91136 | 0.94913 | 1 | 1 | 1 |
| └── c3b1f3b [bitty-walk] | 0.675 | 0.66667 | 0.66667 | 0.63333 | 0.66667 | 0.66667 |

Our second experiment, with different hyperparameters, improves every metric on both the training and test sets. You can now commit these changes to Git.
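The comparison can also be done programmatically. The sketch below copies four of the metric values from the two experiment rows in the table and prints the per-metric change; the helper metric_deltas is a hypothetical name used for illustration. On a real project, DVC's dvc exp diff command is the more natural way to compare two experiments.

```python
# Metric values copied from the bitty-walk and irony-awns rows of the table.
bitty_walk = {
    "train.accuracy": 0.675,
    "train.recall": 0.66667,
    "test.accuracy": 0.63333,
    "test.recall": 0.66667,
}
irony_awns = {
    "train.accuracy": 0.95,
    "train.recall": 0.94913,
    "test.accuracy": 1.0,
    "test.recall": 1.0,
}


def metric_deltas(baseline, candidate):
    """Return candidate minus baseline for every metric present in both runs."""
    return {
        name: round(candidate[name] - baseline[name], 5)
        for name in baseline
        if name in candidate
    }


deltas = metric_deltas(bitty_walk, irony_awns)
for name, delta in deltas.items():
    print(f"{name}: {delta:+.5f}")
```

A positive delta means the second experiment scored higher on that metric.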

Create a Pipeline

To streamline the experiment tracking process, we can create a DVC pipeline that automates the training and evaluation steps. Another great feature of pipelines is that they allow you to run multiple experiments with different hyperparameters in a single command. This is particularly useful when performing hyperparameter tuning or grid search.

Let's start by creating a params.yaml file to store our model hyperparameters:

    model:
      n_estimators: 10
      max_depth: 2

Next, we'll modify our training script to read these hyperparameters from the file:

    from pathlib import Path

    from box import ConfigBox
    from dvclive import Live
    from ruamel.yaml import YAML
    from sklearn import datasets
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score, average_precision_score, recall_score
    from sklearn.model_selection import train_test_split

    # Load params.yaml into a ConfigBox so values can be read with attribute access.
    config = ConfigBox(YAML(typ="safe").load(Path("params.yaml").open(encoding="utf-8")))


    def evaluate(model: RandomForestClassifier, X, y, split: str, live: Live):
        # Log accuracy, macro average precision, and macro recall for one split.
        y_pred = model.predict(X)
        live.log_metric(f"{split}/accuracy", accuracy_score(y, y_pred))
        live.log_metric(
            f"{split}/average_precision",
            average_precision_score(y, model.predict_proba(X), average="macro"),
        )
        live.log_metric(f"{split}/recall", recall_score(y, y_pred, average="macro"))


    X, y = datasets.load_iris(as_frame=True, return_X_y=True)

    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=42
    )

    with Live("experiments") as live:
        # Hyperparameters now come from params.yaml instead of being hard-coded.
        model = RandomForestClassifier(
            n_estimators=config.model.n_estimators,
            max_depth=config.model.max_depth,
            random_state=42,
        )
        model.fit(X_train, y_train)
        evaluate(model, X_train, y_train, "train", live)
        evaluate(model, X_test, y_test, "test", live)

The script now reads the hyperparameters from the params.yaml file and uses them to train the model. To run the pipeline, we need to define a stage in a dvc.yaml file:

    stages:
      train_model:
        cmd: python app.py
        deps:
          - app.py
        params:
          - model

This stage runs the app.py script, which trains the model using the hyperparameters specified in params.yaml. To execute the pipeline, run:

    dvc exp run

| Experiment | train.accuracy | train.average_precision | train.recall | test.accuracy | test.average_precision | test.recall | model.n_estimators | model.max_depth |
|---|---|---|---|---|---|---|---|---|
| workspace | 0.95 | 0.98784 | 0.94955 | 1 | 1 | 1 | 10 | 2 |
| master | - | - | - | - | - | - | 10 | 2 |
| └── 04301de [funny-cyma] | 0.95 | 0.98784 | 0.94955 | 1 | 1 | 1 | 10 | 2 |

Note that the model hyperparameters are now stored in the experiment metadata, making it easier to track and compare different experiments. Let's try to run another experiment with different hyperparameters:

    dvc exp run -S model.n_estimators=30 -S model.max_depth=3

| Experiment | train.accuracy | train.average_precision | train.recall | test.accuracy | test.average_precision | test.recall | model.n_estimators | model.max_depth |
|---|---|---|---|---|---|---|---|---|
| workspace | 0.96667 | 0.9972 | 0.96665 | 1 | 1 | 1 | 30 | 3 |
| master | - | - | - | - | - | - | 10 | 2 |
| ├── 83c1c6b [choky-door] | 0.96667 | 0.9972 | 0.96665 | 1 | 1 | 1 | 30 | 3 |
| └── 04301de [funny-cyma] | 0.95 | 0.98784 | 0.94955 | 1 | 1 | 1 | 10 | 2 |
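Conceptually, each -S option names a nested key in params.yaml with a dotted path and assigns it a new value for that run. The sketch below illustrates the idea on a plain dictionary. It is not DVC's implementation: the helper apply_override is hypothetical, and it assumes integer values, as in the command above.

```python
import copy


def apply_override(params, assignment):
    """Return a copy of params with one 'dotted.key=value' assignment applied."""
    dotted_key, raw_value = assignment.split("=", 1)
    *parents, leaf = dotted_key.split(".")
    updated = copy.deepcopy(params)  # leave the caller's dictionary untouched
    node = updated
    for key in parents:
        node = node.setdefault(key, {})
    # Assumption: values are integers, as in the example command.
    node[leaf] = int(raw_value)
    return updated


# Same structure as the params.yaml file shown earlier.
params = {"model": {"n_estimators": 10, "max_depth": 2}}
for assignment in ("model.n_estimators=30", "model.max_depth=3"):
    params = apply_override(params, assignment)

print(params)  # {'model': {'n_estimators': 30, 'max_depth': 3}}
```

The resulting values match the model.n_estimators and model.max_depth columns of the choky-door row.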

Grid Search

Another powerful feature of DVC is the ability to run grid search experiments. The --queue flag lets you line up multiple experiments and execute them later as a batch. Here's an example:

    dvc exp run --queue -S model.n_estimators=8,16,32 -S model.max_depth=2,3,5

This command queues nine experiments, one for each combination of the three n_estimators values and the three max_depth values. To run all the queued experiments, use:

    dvc exp run --run-all

Here's a summary of the grid search results:

| Experiment | train.accuracy | train.average_precision | train.recall | test.accuracy | test.average_precision | test.recall | model.n_estimators | model.max_depth |
|---|---|---|---|---|---|---|---|---|
| workspace | 0.96667 | 0.9972 | 0.96665 | 1 | 1 | 1 | 30 | 3 |
| master | - | - | - | - | - | - | 10 | 2 |
| ├── d37b551 [above-play] | 1 | 1 | 1 | 1 | 1 | 1 | 32 | 5 |
| ├── 00d0021 [nicer-cere] | 0.975 | 0.9968 | 0.97478 | 1 | 1 | 1 | 32 | 3 |
| ├── 2a77fa6 [wacky-bind] | 0.95833 | 0.99328 | 0.9581 | 1 | 1 | 1 | 32 | 2 |
| ├── 004a3e9 [podgy-arcs] | 1 | 1 | 1 | 1 | 1 | 1 | 16 | 5 |
| ├── 8236073 [oared-snow] | 0.96667 | 0.99719 | 0.96623 | 1 | 1 | 1 | 16 | 3 |
| ├── e3edf66 [flown-nosh] | 0.95 | 0.98885 | 0.94955 | 1 | 1 | 1 | 16 | 2 |
| ├── db393d5 [lento-bice] | 0.99167 | 1 | 0.99145 | 1 | 1 | 1 | 8 | 5 |
| ├── 861f1f0 [sedgy-over] | 0.95833 | 0.99562 | 0.95852 | 1 | 1 | 1 | 8 | 3 |
| ├── 9503b13 [fetal-grub] | 0.95833 | 0.98593 | 0.95852 | 1 | 1 | 1 | 8 | 2 |
| ├── 83c1c6b [choky-door] | 0.96667 | 0.9972 | 0.96665 | 1 | 1 | 1 | 30 | 3 |
| └── 04301de [funny-cyma] | 0.95 | 0.98784 | 0.94955 | 1 | 1 | 1 | 10 | 2 |
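The nine grid-search rows correspond to the Cartesian product of the two value lists passed with -S. As a rough illustration (plain Python, not a DVC API), the same combinations can be enumerated with itertools.product; the variable names are chosen for this sketch only.

```python
from itertools import product

# The value lists from the queued command.
n_estimators_values = [8, 16, 32]
max_depth_values = [2, 3, 5]

# One dictionary per combination, keyed like the table columns.
grid = [
    {"model.n_estimators": n, "model.max_depth": d}
    for n, d in product(n_estimators_values, max_depth_values)
]

print(len(grid))  # 9
for combo in grid:
    print(combo)
```

Three values for each of two hyperparameters give 3 x 3 = 9 runs, so the number of experiments grows multiplicatively with every hyperparameter added to the grid.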

You can read about other approaches to hyperparameter tuning, such as using ranges as values, in the official DVC documentation.

Conclusion

Tools like DVC make experiment tracking a routine part of a machine learning workflow. DVCLive logs metrics with a few lines of code, pipelines record hyperparameters alongside results, and queued runs cover a whole grid of settings with two commands. This simplifies the management of experiments and improves reproducibility and collaboration among team members. By integrating these tools into your workflow, you can spend less time on experiment management and more on improving your models.